Deep Dive Webflux

In Part 1 and Part 2 of this series, we covered how a web application is structured when using Webflux. In this article, we look behind the scenes at how Webflux manages its execution. Engineers often skip over how the underlying technologies work, but with Webflux this understanding is crucial: without it, an application can run into serious issues in the mid-to-long term.

Netty

By default, Spring MVC runs on the Tomcat server, whereas Webflux runs on Reactor Netty. Reactor Netty builds on Netty to provide efficient, non-blocking HTTP, TCP, and UDP clients and servers that keep data flowing even under heavy load. It also incorporates backpressure mechanisms to prevent data overload, which promotes stable and reliable network interactions.

EventLoops

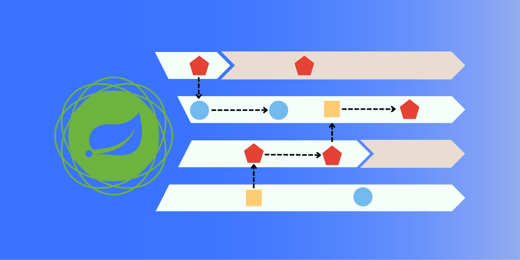

An EventLoop is responsible for performing non-blocking I/O operations, a concept shared with other platforms such as Node.js. In the Java world, it plays a role comparable to a worker thread in Spring MVC, except that a small number of EventLoops serves many connections instead of one thread being dedicated to each request. By default, Reactor Netty creates one EventLoop per available CPU core, with a minimum of four, and manages them through an EventLoopGroup. When a request is received from a Socket Channel, the EventLoopGroup associates it with an EventLoop that either handles the processing itself or delegates it to a thread pool. Once the processing is completed, the EventLoop returns the result to the same Socket Channel where the request was made.

The approach described above outlines how Webflux handles incoming requests, but it is not the most efficient way, because the EventLoop stays occupied until the processing finishes. While this works, it doesn't use the full potential of Netty and asynchronous processing. Instead, when the pipeline reaches a blocking operation, such as a database or network call, the execution should be handed over and a callback function registered. This frees the EventLoop to handle other requests or tasks in the meantime.
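The handover described above can be sketched with plain JDK classes, without Reactor or Netty. This is only an illustrative model, not how Netty is implemented: `EventLoopHandoverSketch`, `eventLoop`, `workers`, and `blockingLookup` are hypothetical names, and a single-threaded executor stands in for one EventLoop.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class EventLoopHandoverSketch {

    // Simulated blocking call, e.g. a JDBC query or a synchronous HTTP request.
    static String blockingLookup(String id) {
        try {
            Thread.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "user-" + id;
    }

    static String handle(String id) {
        ExecutorService eventLoop = Executors.newSingleThreadExecutor(); // stand-in for one EventLoop
        ExecutorService workers = Executors.newFixedThreadPool(4);       // pool for blocking work
        try {
            CompletableFuture<String> response = CompletableFuture
                    // The "event loop" accepts the request and returns immediately.
                    .supplyAsync(() -> id, eventLoop)
                    // The blocking call runs on the worker pool instead.
                    .thenApplyAsync(EventLoopHandoverSketch::blockingLookup, workers)
                    // The registered callback runs on the "event loop" once the result is ready.
                    .thenApplyAsync(user -> "200 OK: " + user, eventLoop);

            // While the lookup is in flight, the event loop thread is free for other work.
            String other = CompletableFuture.supplyAsync(() -> "pong", eventLoop).join();

            return other + " | " + response.join();
        } finally {
            eventLoop.shutdown();
            workers.shutdown();
        }
    }
}
```

Because the blocking lookup never runs on the single "event loop" thread, that thread can answer the second task without waiting for the first to finish.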

Before we proceed, let's quickly review two important concepts: callbacks and Spring Beans.

A callback function is a piece of executable code passed as an argument to another function. In Webflux, callbacks are the functions you pass to the operators of a Mono or Flux, and the operators invoke them at specific points while the data stream is processed. The `doOnNext` operator is a good example: it takes a callback as its argument and executes it each time the upstream Mono or Flux emits an element, which only happens once the preceding operations have produced a value.
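As a minimal sketch of a callback in a Reactor pipeline, the snippet below passes a method reference to `doOnNext`. The class name `DoOnNextSketch` and the sample values are made up for illustration, and the example assumes `reactor-core` is on the classpath.

```java
import java.util.ArrayList;
import java.util.List;
import reactor.core.publisher.Flux;

class DoOnNextSketch {

    static List<String> run() {
        List<String> seen = new ArrayList<>();

        Flux.just("a", "b", "c")
                .map(String::toUpperCase)
                // seen::add is the callback: Reactor invokes it once per emitted element.
                .doOnNext(seen::add)
                // Nothing runs until something subscribes; blockLast() subscribes and waits.
                .blockLast();

        return seen;
    }
}
```

`blockLast()` is used here only to keep the sketch self-contained; in a Webflux handler you would return the Flux and let the framework subscribe.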

Spring Beans are known as the backbone of a Spring application. They are managed by the Spring IoC container and can appear in six different scopes: singleton, prototype, request, session, application, and websocket. We won't delve into the details of each scope here, but I strongly recommend referring to the documentation for further explanation. It is important to highlight that in a Spring MVC application a scope can be bound to a thread; in Webflux there is no such binding, because a single request can be processed by several threads. To account for this, Reactor provides a Context (historically accessed through `subscriberContext`, and through `contextWrite` and `deferContextual` since Reactor 3.4), which is tied to the subscription rather than to a thread and therefore travels with the pipeline across threads.

The combination of callbacks and context unlocks the full potential of reactive programming. By registering a callback and relying on the context, we allow the EventLoop to perform other tasks while the blocking call is in progress. When the callback is later executed, possibly by a different EventLoop, it still has access to the full context the pipeline needs.
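The sketch below shows a value written to the Reactor Context being read inside a callback after the pipeline has hopped to another thread. It assumes Reactor 3.4 or later (for `contextWrite` and `deferContextual`); the class name `ContextSketch` and the `"user"` key are hypothetical.

```java
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Schedulers;
import reactor.util.context.Context;

class ContextSketch {

    static String greet() {
        return Mono.just("Hello")
                // Hop to another thread to show that the Context is not tied to one.
                .publishOn(Schedulers.parallel())
                // Read the value back from the Context inside a callback.
                .flatMap(greeting -> Mono.deferContextual(ctx ->
                        Mono.just(greeting + ", " + ctx.get("user"))))
                // Write the value; it is visible to the operators above this line.
                .contextWrite(Context.of("user", "alice"))
                .block();
    }
}
```

Note that the Context propagates from the subscriber upwards, which is why `contextWrite` sits at the bottom of the chain.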

An efficient way to handle blocking calls is to delegate them to the bounded-elastic scheduler, which is intended for blocking work. This moves the execution off the EventLoop and onto a separate, capped pool of threads. As mentioned above, the number of EventLoops is small by default (one per CPU core, with a minimum of four). It can be increased, but EventLoops are computationally expensive, and adding more might hurt the application's performance.

Bounded elastic is one of Reactor's schedulers. A scheduler defines the execution model and where the work runs; the built-in options include immediate, single, parallel, and bounded elastic (which replaced the older elastic scheduler). Schedulers provide different kinds of thread pools for different tasks and let the application defer execution to a scheduler anywhere in the chain. The example below demonstrates how a bounded-elastic scheduler can be used.

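    import reactor.core.publisher.Mono;
    import reactor.core.scheduler.Schedulers;

    Mono<String> slowServiceCall() {
        // fromCallable defers the blocking work until something subscribes
        return Mono.fromCallable(() -> {
                    Thread.sleep(2000); // Simulate slow operation (2 seconds)
                    return "Data from blocking call";
                })
                // Run the blocking work on a bounded-elastic thread, not on an EventLoop
                .subscribeOn(Schedulers.boundedElastic());
    }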
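To check where blocking work actually runs, a small sketch like the one below can return the name of the executing thread. The class and method names are hypothetical, and the exact thread name depends on the Reactor version and configuration; with default settings it typically starts with `boundedElastic`.

```java
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Schedulers;

class BoundedElasticSketch {

    // Returns the name of the thread that executed the (simulated) blocking work.
    static String threadUsedForBlockingWork() {
        return Mono.fromCallable(() -> {
                    Thread.sleep(100); // simulated blocking call
                    return Thread.currentThread().getName();
                })
                // Subscription, and therefore the callable, runs on the bounded-elastic pool.
                .subscribeOn(Schedulers.boundedElastic())
                .block();
    }
}
```

Logging the thread name this way is a quick sanity check that a blocking call is not running on an EventLoop thread.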

Metrics and Instrumentation

We recently worked on a project where visibility into Webflux metrics was crucial. This is common, because projects that choose Webflux typically prioritize performance. If your application does not need high performance, you might want to work with regular Spring MVC instead.

In this article, we won't dive into the details of dashboard structuring. However, to truly understand performance and spot issues, you need a mix of metrics that together give a panoramic view, from high-level incoming traffic down to the details of your infrastructure and application. For instance, tracking metrics for incoming traffic, infrastructure compute, JVM compute, JVM threads, garbage collection, Netty, Reactor, and R2DBC can give you a comprehensive understanding of how your application is performing, all from a single dashboard.

Reactor provides built-in integration with Micrometer via the reactor-core-micrometer module. This allows you to extract the metrics related to your application and publish them to Prometheus and whichever dashboard tool you use. To do so, include the following dependency in your project.

    <dependency>
        <groupId>io.projectreactor</groupId>
        <artifactId>reactor-core-micrometer</artifactId>
    </dependency>

Once that is configured, you'll be able to expose different types of metrics.

Scheduler metrics

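To enable scheduler metrics, use the following method (note that in Reactor 3.5 and later it is deprecated in favor of `Micrometer.timedScheduler` from the reactor-core-micrometer module):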

Schedulers.enableMetrics();

If you’re using Spring Boot, then it is a good idea to place the invocation before the SpringApplication.run(Application.class, args) call. Once the configuration is completed, here are some of the metrics provided by Micrometer:

executor_completed_tasks_total (FunctionCounter): The approximate total number of tasks that have completed execution.

executor_active_threads (Gauge): The approximate number of threads that are actively executing tasks.

executor_queued_tasks (Gauge): The approximate number of tasks that are queued for execution.

executor_pool_size_threads (Gauge): The current number of threads in the pool.

Each executor metric includes a reactor_scheduler_id tag, which helps identify the corresponding pool of threads.

Publisher metrics

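To add metrics at custom locations, you can use the .metrics() method on your reactive pipeline. Here's an example from the Project Reactor documentation. Note that in Reactor 3.5 and later .metrics() is deprecated in favor of the tap operator combined with the reactor-core-micrometer module.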

    listenToEvents()
        .name("events")
        .metrics()
        .doOnNext(event -> log.info("Received {}", event))
        .delayUntil(this::processEvent)
        .retry()
        .subscribe();

In this example, the .metrics() method is added to the pipeline to track metrics. You can place it at custom locations in your reactive pipeline to monitor specific parts of your application's behavior. For more details on the metrics, we recommend reading the documentation.
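For Reactor 3.5 and later, a roughly equivalent pipeline can be sketched with `tap` and `Micrometer.metrics` from the reactor-core-micrometer module. This is a minimal sketch, not the documentation's example: the class name `TapMetricsSketch` and the sample data are hypothetical, and a `SimpleMeterRegistry` is used only to keep it self-contained (a real application would normally use the registry provided by Spring Boot).

```java
import io.micrometer.core.instrument.MeterRegistry;
import io.micrometer.core.instrument.simple.SimpleMeterRegistry;
import reactor.core.observability.micrometer.Micrometer;
import reactor.core.publisher.Flux;

class TapMetricsSketch {

    static MeterRegistry run() {
        // In-memory registry, for illustration only.
        MeterRegistry registry = new SimpleMeterRegistry();

        Flux.just("a", "b", "c")
                // Name of the sequence, used to identify its meters.
                .name("events")
                // Record metrics for the sequence at this point in the pipeline.
                .tap(Micrometer.metrics(registry))
                .blockLast();

        return registry;
    }
}
```

After the pipeline completes, `registry.getMeters()` lists the meters that were recorded for the named sequence.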

This is the final article in our series on Reactive Programming and Webflux. If you missed the previous articles, check out Part 1 and Part 2. The goal of this article was to assist you at the beginning of your journey to develop a Webflux application. For further information and assistance with your project, feel free to contact us here or email sales@ipponusa.com with any questions.
