AWS CDK is a tool that allows developers to define their infrastructure as code in popular programming languages, rather than in YAML config files.

Using Python with AWS CDK and Lambda functions lets you define, build, and test an application from the ground up in a single codebase. Tools within the Python ecosystem like black, pylint, and pytest give your infrastructure and application a unified style, automatic testing, and inline documentation.

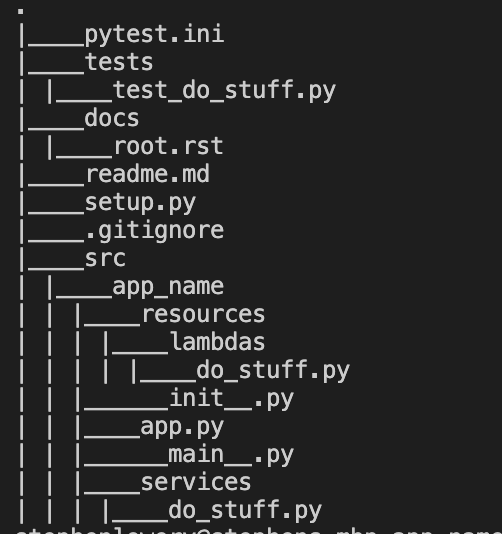

Project Structure

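When working with pytest, it helps to keep application code in a "src" directory, as recommended in this post in ionel's blog. Our project layout then puts the app_name package under src/, the tests in a top-level tests/ directory, and setup.py at the project root.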

In setup.py, we need to point package_dir and packages to our src directory:

# setup.py
""" Project installation configuration """

from setuptools import setup, find_packages

setup(
    name="app_name",
    version="0.1.1",
    packages=find_packages("src"),
    package_dir={"": "src"},
    author="Author Name",
    author_email="author@domain.com",
)

Then, we can install an editable version of our project for development by running:

pip install -e .

Now tests in tests/ should be able to reference our application's modules through absolute imports like from app_name import app.

Getting Started with Testing

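Setting up tests with pytest is fairly straightforward. In the top-level tests/ directory, create a file called conf_test.py. (By default pytest only collects tests from files named test_*.py or *_test.py, so a test placed in conftest.py would not be collected; conftest.py is reserved for shared fixtures.) In the file, import pytest and add a simple test: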

""" Simple pytest configuration """

import pytest


def test_conf():
    """ Assert tests are configured """
    assert True

To run our test suite, we can use the pytest command:

pytest tests

=================================================================================== test session starts ===================================================================================
platform darwin -- Python 3.8.2, pytest-5.4.1, py-1.8.1, pluggy-0.13.1
rootdir: /path/to/your/app/tests/
collected 1 item

tests/conf_test.py . [100%]

==================================================================================== 1 passed in 0.01s ====================================================================================

This will run all tests in the tests directory. Add additional tests for modules, functions, and classes and make sure they pass!

As you add tests, you may come across cases where certain functions have side effects, like posting to an API. One way to mitigate that is through monkeypatching and dummy objects.

DummyClient: Testing Without Side Effects

To prevent our project from provisioning resources during testing, we created a DummyClient class with a single method, do_nothing(), and overrode the __getattr__() method to point all undeclared attributes to that method:

# dummy_client.py
class DummyClient(object):
    ...

    def do_nothing(self, *args, return_value=None, return_none=False, **kwargs):
        if return_none:
            return None
        elif return_value is not None:
            return return_value
        return self.default_return_value

    def __getattr__(self, attr):
        return self.do_nothing

The do_nothing() method accepts any number of positional and/or keyword arguments, and returns DummyClient.default_return_value unless return_value or return_none are specified in a function call. That means we can have a single client that can "pretend" to run any method (regardless of signature) without additional configuration.
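To make that behavior concrete, here is a minimal, self-contained sketch of such a client. The listing above elides the class body, so the constructor here (a `name` plus an optional `default_return_value`) is an assumption inferred from how the client is used later, not the original implementation; the method names called on it are arbitrary.

```python
class DummyClient:
    """Stand-in client: every undeclared attribute resolves to do_nothing()."""

    def __init__(self, name, default_return_value=None):
        # Assumed constructor; the original class body is elided.
        self.name = name
        self.default_return_value = default_return_value

    def do_nothing(self, *args, return_value=None, return_none=False, **kwargs):
        # Accept any call signature and return a canned value.
        if return_none:
            return None
        if return_value is not None:
            return return_value
        return self.default_return_value

    def __getattr__(self, attr):
        # Only invoked when normal lookup fails, so real attributes such as
        # name and default_return_value are unaffected.
        return self.do_nothing


client = DummyClient("boto3.client", default_return_value={"ok": True})

# Any method name, with any arguments, returns the default value.
put_result = client.put_object(Bucket="b", Key="k", Body=b"data")

# A single call can override the default...
override = client.get_item("table", return_value={"Item": {}})

# ...or force a None result even though a default is configured.
nothing = client.delete_message(return_none=True)
```

Because `__getattr__` is a fallback rather than a replacement for normal lookup, setting `default_return_value` on an instance works as expected and is not swallowed by the catch-all.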

Monkeypatching

To use our dummy client instead of library clients (like requests or boto3), we can take advantage of the monkeypatch fixture in pytest. This allows us to replace the requests or boto3.client objects with instances of our dummy client during particular tests.

# test_lambda.py
from collections import namedtuple

import pytest
import requests

from app_name.resources import DummyClient
from app_name.resources.services.some_lambda import lambda_handler


def test_send_request(monkeypatch):
    client = DummyClient('requests.post')
    # Lightweight stand-in for requests.Response
    response_class = namedtuple('Response', ['json', 'body', 'status_code'])
    client.default_return_value = response_class(
        json={'foo': 1},
        body='{"foo": 1}',
        status_code=200,
    )
    # client.post resolves to DummyClient.do_nothing via __getattr__
    monkeypatch.setattr(requests, 'post', client.post)
    response = lambda_handler(event={}, context=object())
    assert response.get('status_code') == 200

With this patch, the real post method is never invoked; our DummyClient.do_nothing method is called instead. The return value here is a named tuple rather than a requests.Response, but our application code only needs to know that there are status_code and body attributes.
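The swap-and-restore mechanics can be illustrated without pytest or a network connection. The sketch below is an approximation under stated assumptions: `fake_requests` is a hypothetical stand-in for the requests module, `lambda_handler` is a hypothetical handler, and the standard library's `unittest.mock.patch.object` is used in place of pytest's `monkeypatch.setattr`, which performs a comparable replacement and undoes it when the test finishes.

```python
from collections import namedtuple
from types import SimpleNamespace
from unittest import mock


def real_post(url, **kwargs):
    # Represents the side effect we want to keep out of tests.
    raise RuntimeError("network call attempted during a test")


# Hypothetical stand-in for the `requests` module.
fake_requests = SimpleNamespace(post=real_post)


def lambda_handler(event, context):
    # Hypothetical handler: posts the event and reports what came back.
    response = fake_requests.post("https://example.invalid/hook", json=event)
    return {"status_code": response.status_code, "body": response.body}


# Named tuple standing in for a response object, as in the test above.
Response = namedtuple("Response", ["json", "body", "status_code"])
canned = Response(json={"foo": 1}, body='{"foo": 1}', status_code=200)


def fake_post(*args, **kwargs):
    # Accepts any signature and returns the canned response.
    return canned


# Replace the attribute for the duration of the block only.
with mock.patch.object(fake_requests, "post", fake_post):
    patched_result = lambda_handler(event={}, context=object())

# Once the block exits, the original function is back in place.
restored = fake_requests.post is real_post
```

One design point worth noting: the handler looks up `post` on the module object at call time. A patch of this kind would not take effect for code that had already bound the function to a local name with `from ... import post`.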

Leveraging pytest Fixtures

One common task for a lambda function is to use boto3 to fetch some data about a resource, do some transformations to it, then send the new data to another service or resource. Many services will receive events from the same sources (e.g. s3, SQS, EventBridge, etc.), and those events will share a common schema. If we move our events from being tied directly to a specific test into a common event store, we can reduce redundancy in our testing suite:

// events.json
{
    "events": {
        "empty_event": {
            "event": {},
            "metadata": {}
        },
        "200_ok": {
            "event": {
                "statusCode": 200,
                "body": "some content"
            },
            "metadata": {}
        },
        ... more events ...
    }
}

We refactored our tests to include tests/events.json, a file that stores sample events from each source of data, as well as some generic events (empty event, 500 response, etc.). To get those events passed to tests, we use a fixture in conftest.py, available to every test in the project, that exposes the test events loaded into memory at the start of testing. We can then use pytest's parametrize to specify which events we want to test. While we're at it, we can start using a fixture for the "context" object that AWS passes to each lambda:

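# conftest.py
import json
import os

import pytest

# Resolve events.json relative to this file so tests run from any working directory
EVENTS_PATH = os.path.join(os.path.dirname(__file__), 'events.json')

with open(EVENTS_PATH, 'r') as f:
    event_dict = json.load(f)


@pytest.fixture
def events():
    return event_dict['events']


@pytest.fixture
def context():
    return object()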

# test_handler.py
import pytest

from app_name.resources.some_lambda import lambda_handler


@pytest.mark.parametrize('event_name', ['empty_event', '200_ok'])
def test_handler(events, event_name, context):
    event = events[event_name]['event']
    # metadata may not define an expected response for every event
    expected_response = events[event_name]['metadata'].get('expected_response')
    response = lambda_handler(event, context)
    # should get a 200 response
    assert response.get('statusCode') == 200
    # should get a response body that isn't empty
    assert response.get('body')

This lets us easily test a function with a number of different events without duplicating logic across tests. Adding a new event to a test is as easy as adding its name to the parametrize list argument.
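As a rough, pytest-free sketch of the event-store idea, the snippet below loads named events from a JSON file and checks a handler against each one; every loop iteration corresponds to one parametrized case. The handler, the sample store, and the convention of keeping an `expected_response` under `metadata` are all assumptions made for illustration.

```python
import json
import tempfile
from pathlib import Path


def load_events(events_path):
    """Return the mapping stored under the top-level "events" key."""
    with open(events_path, "r") as f:
        return json.load(f)["events"]


def lambda_handler(event, context):
    # Hypothetical handler: always succeeds and returns a non-empty body.
    return {"statusCode": 200, "body": event.get("body") or "empty"}


# Hypothetical event store; the expected_response entries are an assumed convention.
sample_store = {
    "events": {
        "empty_event": {
            "event": {},
            "metadata": {"expected_response": {"statusCode": 200}},
        },
        "200_ok": {
            "event": {"statusCode": 200, "body": "some content"},
            "metadata": {"expected_response": {"statusCode": 200}},
        },
    }
}

# Write the store to a temporary file so the loader reads real JSON from disk.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "events.json"
    path.write_text(json.dumps(sample_store))
    events = load_events(path)

# One iteration per event name, mirroring the parametrize list.
results = {}
for event_name in ["empty_event", "200_ok"]:
    entry = events[event_name]
    response = lambda_handler(entry["event"], context=object())
    expected = entry["metadata"]["expected_response"]
    results[event_name] = response["statusCode"] == expected["statusCode"]
```

Keeping the expectation next to the event means a new case needs only a new JSON entry and a new name in the list, with no change to the test body.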

Conclusion

Leveraging features of pytest, we can test not only our function code, but the infrastructure itself, all in one code repository. By testing your entire stack with the same codebase, you can ensure your application is well maintained, that infrastructure is deployed correctly, and that your configuration and codebase are documented in the same place.
