Breaking the Stack: Rethinking Tenant Isolation in Your Cloud Migration - Part 1

Part 1 of 3 - The Silo Tenant Isolation Model

Welcome to part 1 of 3 of “Breaking the Stack: Rethinking Tenant Isolation in Your Cloud Migration.” In this series, we will touch on all three tenant isolation models (silo, bridge, and pool), but in this first part, we will look only at the silo model.

There are many driving factors behind an organization's desire to move from on-premises infrastructure to the cloud. These factors are covered extensively across the web, including in blogs I have written and webinars I have spoken on. Today, I want to look a little beyond the decision to move to the cloud and zoom in on an often overlooked challenge: changes to an application's tenant isolation model during a migration. Once the business decision has been made to move from an on-premises hypervisor like VMware (or even bare metal) to a cloud environment like AWS, it’s important to look at the strategy. We won’t cover the actual migration strategy in this blog; instead, we will discuss how the isolation strategy can impact your target architecture, compliance, performance, and cost during and after the migration. We will also look at some key differences between the two operating environments. At the very end of the series, we will consider how the tenant isolation strategy can impact your AI maturity path.

First, let’s get something out of the way… What does tenant isolation strategy even mean? Is it a buzzword? Is it fancy cloud lingo? Actually, it’s a set of terms and definitions that architects use to describe how resources, data, and application components are separated or shared between tenants in a multi-tenant system. A tenant is a distinct customer, user group, or organizational unit that uses a shared software system while maintaining a logical separation of data, configuration, and access. Alright friends, let’s put on our thinking caps, because it’s time to get technical!

Legacy Architecture and Isolation Assumptions

In the “old days” (okay, maybe only 15 years or so ago), most apps effectively used a “silo model” tenant isolation architecture by default. This means each customer or internal department had its own VMs, its own database instances, and so on. The hypervisors of this era, such as VMware, made this relatively easy and manageable when hosting in a data center or on premises (or even in your closet!). The details of these siloed architectures are super important when designing your lift-and-shift, lift-tinker-and-shift, or replatform initiatives. Ask questions such as: “Does each client have their own database instance, or their own logical database on a shared instance?” or “Do clients use remote access software to reach a dedicated app server, or a session host that is brokered and shared by many tenants?”

These questions may seem irrelevant if you are performing a lift-and-shift migration, but if you are moving VMs hosted on VMware to something like EC2, they become very important. The primary reason is cost. Running 300 VMs on a hypervisor cluster that spans 6 nodes is much more cost-effective than running 300 EC2 instances, because on premises you pay for the nodes, while on EC2 you pay for every instance. Similarly, hosting 100 database instances with one tenant each will be far more costly than a single large database instance with 100 logical databases.
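To make the cost argument concrete, here is a minimal Python sketch of the arithmetic. The two prices are hypothetical placeholders, not real VMware or AWS figures; the point is the shape of the calculation: on-premises cost grows with the number of hosts you pack VMs onto, while a one-to-one lift and shift grows with every single VM.

```python
import math

# Hypothetical placeholder prices for illustration only; substitute your own
# licensing quotes and current AWS pricing before drawing any conclusions.
HOST_MONTHLY_COST = 4_000.0    # assumed all-in monthly cost of one hypervisor node
INSTANCE_MONTHLY_COST = 140.0  # assumed monthly cost of one EC2 instance


def on_prem_cost(vm_count: int, vms_per_host: int, host_cost: float) -> float:
    """Cost of packing VMs onto as few hypervisor hosts as capacity allows."""
    hosts = math.ceil(vm_count / vms_per_host)
    return hosts * host_cost


def one_to_one_cloud_cost(vm_count: int, instance_cost: float) -> float:
    """Lift-and-shift cost when every VM becomes its own EC2 instance."""
    return vm_count * instance_cost


if __name__ == "__main__":
    # 300 VMs on 6 nodes, as in the text, works out to 50 VMs per node.
    vms, per_host = 300, 50
    print(f"On-prem: {on_prem_cost(vms, per_host, HOST_MONTHLY_COST):,.0f}")
    print(f"EC2 1:1: {one_to_one_cloud_cost(vms, INSTANCE_MONTHLY_COST):,.0f}")
```

The same structure applies to the database example: compare 100 single-tenant instances against one consolidated instance using your own prices.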

Cloud Migration Forces a Rethink in Architecture

When engineers of that era set out to build the client-server applications that would run on a hypervisor, they had several infrastructure and hosting components to work with. Below, I will go into a bit more detail about these components, their AWS counterparts, and how they may impact your tenant isolation strategy. I will also touch on other parts of your organization that may be impacted by a shift in operating paradigms.

Hypervisor Layer

This layer manages virtual machines on physical servers. If you were running VMware, this was likely VMware ESXi. It provides CPU, memory, storage, and network isolation, abstracting the hardware for each VM and providing an easy-to-use, one-stop solution for managing those VMs. Compared to cloud-native counterparts, such as EC2 on AWS, there aren’t too many fundamental differences. The modern AWS counterpart to VMware ESXi is the AWS Nitro System, which handles virtualization and isolation at scale, with improved performance and security achieved by offloading virtualization functions to dedicated hardware.

The main consideration for this layer when lifting and shifting applications from VMware to EC2 is how infrastructure and network engineers work with infrastructure on a day-to-day basis. Although these systems provide similar functionality, the learning curve to move from one to the other is still very steep. The upside is cost flexibility. On a typical VMware setup, you would usually pay a license fee for several nodes and then run your VMs on top of them. The cost is relatively consistent, but you likely end up with more compute than you need. With EC2, once you have trained and upskilled your team, you can right-size the fleet of machines more precisely.
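As a rough illustration of right-sizing, the sketch below picks the smallest instance type that covers a VM's observed peak usage plus headroom, rather than its VMware allocation. The catalog is a small subset of the m5 family (vCPU and memory figures as published by AWS); the 20% headroom and the source of the peak metrics are assumptions, and in practice you would feed it utilization data gathered from your existing monitoring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: int


# Illustrative subset of the general-purpose m5 family, ordered smallest first.
CATALOG = [
    InstanceType("m5.large", 2, 8),
    InstanceType("m5.xlarge", 4, 16),
    InstanceType("m5.2xlarge", 8, 32),
    InstanceType("m5.4xlarge", 16, 64),
]


def right_size(peak_vcpus: float, peak_memory_gib: float,
               headroom: float = 0.2) -> InstanceType:
    """Return the smallest catalog entry covering observed peaks plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    for itype in CATALOG:
        if itype.vcpus >= need_cpu and itype.memory_gib >= need_mem:
            return itype
    raise ValueError("No catalog entry is large enough; extend CATALOG.")
```

For example, a VM allocated 8 vCPUs and 32 GiB on VMware that never exceeds 1.5 vCPUs and 10 GiB would land on m5.xlarge rather than an m5.2xlarge that mirrors its old allocation.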

Virtual Networking

The virtual networking component of a hypervisor (e.g., vSwitches and VLANs) allows VMs to communicate with each other and the outside world via software-defined networking, which lets you provide tenant-level network segmentation. The AWS equivalent is Amazon VPC (Virtual Private Cloud), which also enables software-defined networking with features like subnets, security groups, network ACLs (NACLs), and transit gateways, to name a few. The primary difference is that AWS offers much more fine-grained and scalable control than VMware.
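In a silo model, tenant-level segmentation on AWS often starts with giving each tenant its own address range. The following sketch, using only Python's standard `ipaddress` module, carves non-overlapping /24 blocks out of one supernet. The 10.0.0.0/16 range, the /24 block size, and the tenant names are assumptions; whether each block becomes a dedicated VPC or a subnet inside a shared VPC is a separate design decision.

```python
import ipaddress


def allocate_tenant_subnets(supernet: str, tenants: list[str],
                            prefix: int = 24) -> dict[str, ipaddress.IPv4Network]:
    """Assign each tenant its own non-overlapping block carved from one supernet."""
    network = ipaddress.ip_network(supernet)
    # subnets() yields consecutive, non-overlapping blocks of the requested size.
    blocks = network.subnets(new_prefix=prefix)
    allocation = {}
    for tenant in tenants:
        try:
            allocation[tenant] = next(blocks)
        except StopIteration:
            raise ValueError(f"{supernet} has no room left for tenant {tenant!r}") from None
    return allocation
```

Calling `allocate_tenant_subnets("10.0.0.0/16", ["tenant1", "tenant2"])` gives tenant1 10.0.0.0/24 and tenant2 10.0.1.0/24. Keeping ranges non-overlapping matters if tenant networks are later connected through peering or a transit gateway.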

Virtual Storage

The virtual storage component of a hypervisor like VMware (e.g., VMFS, NFS, or iSCSI datastores) abstracts disk and storage access from physical hardware into logical volumes assigned to each VM. This is where AWS starts to differ pretty dramatically. Its most similar offering is Amazon EBS (Elastic Block Store). AWS also offers Amazon S3 (object storage) to replace more traditional storage layers, along with more targeted managed file storage services such as Amazon EFS (Elastic File System) and Amazon FSx (including NetApp ONTAP and Windows File Server variants).
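One way to start the storage conversation is to inventory each tenant's storage and group it by a candidate AWS target. The mapping below simply restates the pairings in the paragraph above (with Amazon FSx for NetApp ONTAP for existing ONTAP volumes); the category names and the `plan_storage` helper are hypothetical, and the mapping is a starting point rather than a rule.

```python
from collections import defaultdict
from enum import Enum


class LegacyStorage(Enum):
    """Rough categories of storage found in a VMware-era tenant silo."""
    VM_DISK = "virtual disk attached to a single VM"
    SMB_SHARE = "Windows (SMB) file share"
    NFS_SHARE = "NFS file share"
    ONTAP_VOLUME = "NetApp ONTAP volume"
    WHOLE_FILE_BLOBS = "files read and written whole by the app"


# Starting-point mapping only; protocol, latency, and cost needs decide the real choice.
AWS_TARGET = {
    LegacyStorage.VM_DISK: "Amazon EBS",
    LegacyStorage.SMB_SHARE: "Amazon FSx for Windows File Server",
    LegacyStorage.NFS_SHARE: "Amazon EFS",
    LegacyStorage.ONTAP_VOLUME: "Amazon FSx for NetApp ONTAP",
    LegacyStorage.WHOLE_FILE_BLOBS: "Amazon S3",
}


def plan_storage(inventory: list[tuple[str, LegacyStorage]]) -> dict[str, list[str]]:
    """Group inventory items (name, kind) by their suggested AWS target service."""
    plan: dict[str, list[str]] = defaultdict(list)
    for name, kind in inventory:
        plan[AWS_TARGET[kind]].append(name)
    return dict(plan)
```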

Management & Monitoring

Management and monitoring in a VMware environment would be handled by something like vCenter, a centralized interface for provisioning, monitoring, and managing the virtual environment. The AWS equivalent is the AWS Management Console. AWS also provides Amazon CloudWatch, AWS CloudTrail, AWS Trusted Advisor, and AWS Systems Manager to handle monitoring, automation, and governance across accounts and regions.

Identity & Access Management

With a hypervisor, this piece of the puzzle is typically handled by an Active Directory integration in vSphere, which controls who can access what in the virtual environment. On AWS, we have AWS Identity and Access Management (IAM) and AWS IAM Identity Center (the successor to AWS SSO), offering federation, role management, and fine-grained permissions control.
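To show what fine-grained permissions can look like in practice, here is a sketch that builds an IAM policy document limiting a tenant's role to its own prefix in an S3 bucket. The bucket name, the prefix layout, and the `tenant_policy` helper are hypothetical; in a strict silo you might instead point the same statements at a bucket dedicated to that tenant.

```python
import json


def tenant_policy(tenant_id: str, bucket: str) -> dict:
    """Build an IAM policy allowing access only to one tenant's prefix in a bucket."""
    prefix = f"{tenant_id}/"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is a bucket-level action, so restrict it with the s3:prefix key.
                "Sid": "ListOwnPrefixOnly",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}*"]}},
            },
            {
                # Object-level actions are scoped by the object ARN itself.
                "Sid": "ReadWriteOwnObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(tenant_policy("tenant1", "example-tenant-files"), indent=2))
```

The resulting JSON could be attached to a per-tenant IAM role; it is worth checking with the IAM policy simulator before relying on it.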

Tenancy Isolation Models: The Silo Model

Okay, finally! With the above components fresh in our minds, and with the idea that we will be migrating client-server applications from a hypervisor like VMware to a cloud such as AWS, let’s dive into the first of the tenant isolation models available to us and the considerations to make when planning your migration.

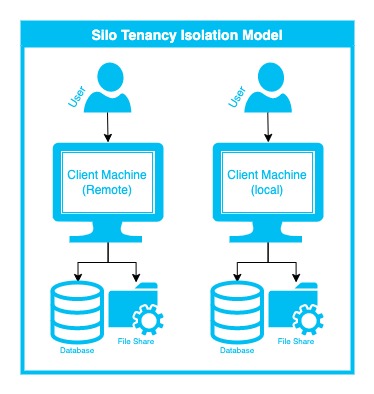

As previously mentioned, the silo model is the tenant isolation model in which each client, user group, or business unit has its own dedicated infrastructure. Let’s take a look at an example with some diagrams.

Diagram 1

To better illustrate this for the reader, let’s consider a client-server application that is hosted on VMware. These are the key indicators that the silo tenant isolation model is in use:

- The end user either launches the application (typically a native client) on their local machine, or connects to a remote environment using something like Microsoft Remote Desktop Services and launches the application there.

- That client then communicates directly with a database, and potentially, a file share.

- The database serves only this single user, or perhaps a group of users belonging to the same organization or business unit. It can be a logical database hosted on a database instance (like SQL Server) alongside many other databases, or it can be its own dedicated database server.

- The file share is either its own dedicated server or its own dedicated directory on a file share server.

You may be asking yourself, “When a user launches the application on their local or remote machine, how does the client know which backend to go to?” or “How does the routing from the user login and client know that it needs to take this network path and access this database?” The answers to these questions are often the “touch points” you need to understand when migrating a workload like this. It is also worth pointing out that many organizations have devised custom routing mechanisms to achieve isolation. This could be a shared database or table that hands off additional credentials to the client based on the user who initiated login, routing rules inside the Gateway servers that are part of the Microsoft Remote Desktop Services deployment, or DNS entries that give each tenant its own subdomain, like tenant1.mycompanydomain.com.
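The DNS-based variant can be sketched as a two-step lookup: derive the tenant from the subdomain, then fetch that tenant's dedicated backend from a catalog. Everything here is hypothetical (the `TenantBackend` fields, the host names, and the in-memory catalog standing in for a shared routing table); it only illustrates the kind of touch point worth documenting before you migrate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantBackend:
    db_host: str
    db_name: str
    file_share: str


# Hypothetical catalog; in a real system this might be a shared routing table.
TENANT_CATALOG = {
    "tenant1": TenantBackend("sql01.internal", "Tenant1DB", r"\\fs01\tenant1"),
    "tenant2": TenantBackend("sql02.internal", "Tenant2DB", r"\\fs01\tenant2"),
}

BASE_DOMAIN = "mycompanydomain.com"


def tenant_from_host(host: str) -> str:
    """Extract 'tenant1' from a host such as 'tenant1.mycompanydomain.com'."""
    host = host.lower().rstrip(".")
    suffix = "." + BASE_DOMAIN
    if not host.endswith(suffix):
        raise ValueError(f"{host!r} is not under {BASE_DOMAIN}")
    label = host[: -len(suffix)]
    if not label or "." in label:
        raise ValueError(f"Expected exactly one tenant label in {host!r}")
    return label


def resolve_backend(host: str) -> TenantBackend:
    """Map an incoming host name to that tenant's dedicated backend."""
    tenant = tenant_from_host(host)
    try:
        return TENANT_CATALOG[tenant]
    except KeyError:
        raise LookupError(f"Unknown tenant {tenant!r}") from None
```

Each field in such a catalog (hostname, database name, share path) is likely to change during migration, which is why it deserves an explicit inventory.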

Even if you do not plan on changing the tenancy isolation model during the migration, it is important to understand how isolation is achieved. This will help inform your target infrastructure and allow you to clean things up where best practices are being violated. Examples of issues to watch out for include hardcoded IP addresses and hostnames, file share paths stored within the database, and credentials stored insecurely in plaintext configuration files or database tables.
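A quick way to surface some of these issues is a heuristic scan of configuration files and exported settings. The sketch below flags IPv4 literals, UNC file share paths, and password-style key/value pairs. It does not attempt hostname detection, the regular expressions are assumptions that will produce false positives (version strings, for example), and it is no substitute for a dedicated secrets scanner.

```python
import re

# Heuristic patterns for common migration blockers; expect false positives.
PATTERNS = {
    "hardcoded IPv4 address": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "UNC file share path": re.compile(r"\\\\[\w.-]+\\[\w$.-]+"),
    # Stops at ';' or whitespace so connection-string neighbours are not swallowed.
    "plaintext credential": re.compile(r"(?i)\b(?:password|pwd)\s*[=:]\s*[^;\s]+"),
}


def scan_config(text: str) -> list[tuple[int, str, str]]:
    """Return (line number, finding type, matched text) for each suspicious match."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                findings.append((lineno, label, match.group(0)))
    return findings
```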

While planning and designing the migration, you may also discover that replicating the exact setup (dedicated VMs, databases, and file shares) will cost much more in the cloud. If this is the case for your workload, it may be worth exploring ways to change the tenancy isolation model. By pooling and sharing resources, we can reduce costs and operational overhead. One example could be replacing the Microsoft Remote Desktop Services stack with Amazon WorkSpaces or Amazon AppStream 2.0. You could even explore alternative remote desktop solutions, such as Apache Guacamole or TSplus. While you are at it, wouldn’t all those file share servers be better off just dying? Consider combining all the file shares into one large Amazon FSx for Windows File Server file system or Amazon Elastic File System (EFS), and, if possible, try re-architecting the application to use object storage instead (S3 is much cheaper!).
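If you do consolidate, each tenant's dedicated share needs a predictable home in the shared storage. A minimal sketch, assuming a hypothetical `tenant/share/path` layout (a convention of this example, not an AWS requirement), translates a UNC path on a tenant's file server into a per-tenant prefix usable as an S3 object key or, with the separator adjusted, a directory on a shared file system.

```python
from pathlib import PurePosixPath, PureWindowsPath


def to_consolidated_key(unc_path: str, tenant_id: str) -> str:
    r"""Map a path on a tenant's dedicated share to a per-tenant prefix.

    \\tenant1-fs01\data\reports\q1.xlsx -> tenant1/data/reports/q1.xlsx
    """
    path = PureWindowsPath(unc_path)
    # For UNC paths, drive is '\\server\share'; local paths give 'C:' or ''.
    if not path.drive.startswith("\\\\"):
        raise ValueError(f"Not a UNC path: {unc_path!r}")
    share = path.drive.split("\\")[-1]
    # parts[0] is the '\\server\share\' anchor; the rest is the relative path.
    relative = path.parts[1:]
    return str(PurePosixPath(tenant_id, share, *relative))
```

Prefixing by tenant keeps identically named shares (every tenant's `data`, say) from colliding once they share one file system or bucket.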

Applications using the silo tenancy isolation model offer the migration flexibility of moving one tenant's isolated environment at a time. While the desirability of this approach depends on several factors that will be discussed elsewhere, it's important to recognize it as a potential migration strategy.
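Because each silo is self-contained, tenant-by-tenant migration can be planned as a series of waves. This sketch orders tenants by data volume and groups them into fixed-size waves. The smallest-first ordering (so early waves act as low-risk pilots), the wave size, and the `Tenant` fields are all assumptions you would replace with your own criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tenant:
    name: str
    data_gb: float


def plan_waves(tenants: list[Tenant], wave_size: int) -> list[list[str]]:
    """Group tenants into migration waves, smallest first so early waves act as pilots."""
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    ordered = sorted(tenants, key=lambda t: t.data_gb)
    return [
        [t.name for t in ordered[i : i + wave_size]]
        for i in range(0, len(ordered), wave_size)
    ]
```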

Conclusion

This concludes Part 1 of the Breaking the Stack series. In this blog, we took a look at some legacy architecture assumptions, considered how cloud migrations allow us to rethink architecture and tenancy isolation, and reviewed the different pieces of the legacy data center stack (specifically VMware) that we may have to contend with. Finally, we jumped into the first of our three isolation models, the silo model. Check back soon for Part 2 of 3, where we will cover the bridge isolation model.
