JHipster can generate Kubernetes deployment descriptors for the applications it generates. It recently started offering to add Istio-specific configuration to these descriptors.

After a brief introduction to Istio, currently the best-known service mesh, we will see that the microservice architectures associated with these deployments can differ greatly depending on the generation options chosen, and we will study their characteristics.

Quick introduction to Istio

Istio is a service mesh fully integrated with Kubernetes.

Without going into too much detail, which is not the purpose of this post, its role is to manage all the communications between the services within your microservice architecture.

This allows it to manage the following aspects in particular:

- smart load-balancing between different instances of the services (including circuit breaking and retry aspects)

- blue/green deployment

- canary testing or A/B testing between two versions of a service

- monitoring/observability of communication flows and services

- security in inter-application calls

The important point to understand for this post is that it can replace most of the tools provided by the Netflix stack, such as Ribbon, Hystrix, part of Zuul, and Eureka (when running on Kubernetes, by benefiting directly from the discovery service the platform provides).

You can find interesting resources on the subject here:

- Pattern: Service Mesh (explaining the general concept of a service mesh)

- What is Istio?

We'll talk a little more about how it works later in this post.

Reminders about the classical microservice architecture generated by JHipster

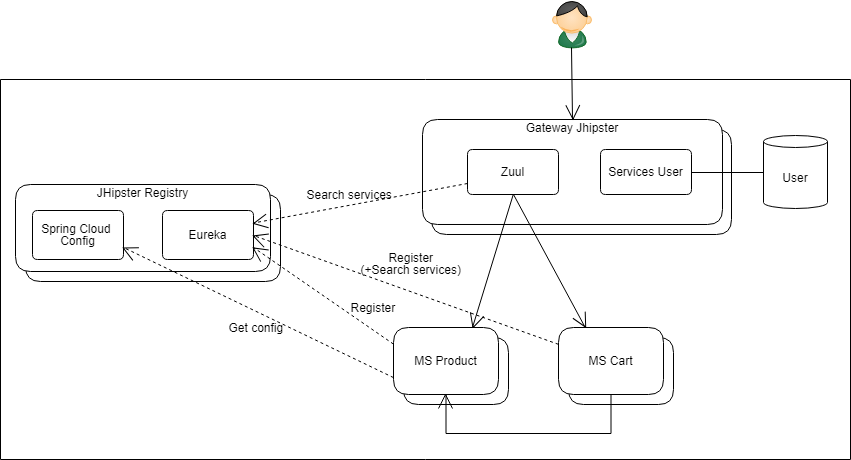

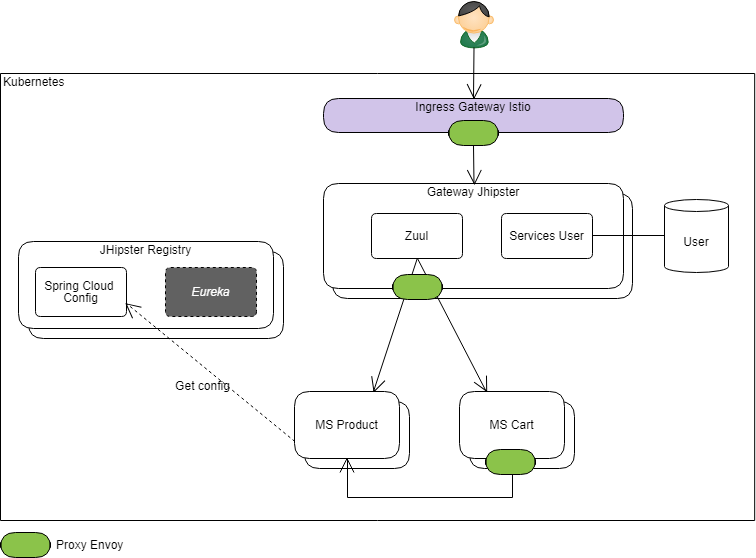

Here is the classic architecture that JHipster generates in the common case:

In this architecture, JHipster relies heavily on Spring Cloud and in particular Spring Cloud Netflix:

- The JHipster Registry hosts:

- an Eureka server (service registry)

- and a Spring Cloud Config server (which can centralize application configuration)

- each instance of microservice:

- gets centralized configuration from Spring Cloud Config

- registers itself (with its default IP) with Eureka.

- in the frontend of the architecture, the JHipster gateway:

- handles the routing of HTTP requests to the different generated microservices based on the context path of the URLs. For this, it uses Zuul (and Ribbon) plugged on Eureka to dynamically discover the location of available services

- it also hosts the Web Application (Angular or React) as well as some core services such as the user repository (depending on the generation option)

- each microservice can benefit from Eureka and Ribbon to discover and call other microservices of the architecture

- Hystrix ensures the robustness of all these calls (GW-> MS and MS-> MS): ensuring in particular the circuit breaking function

This architecture works well on a "vanilla" Kubernetes cluster ("without Istio"): the JHipster generators ensuring the Kubernetes descriptor contains the necessary configuration for deploying the JHipster Registry in high availability (several replicas being created via a StatefulSet).

The typical architecture of JHipster on Kubernetes with Istio

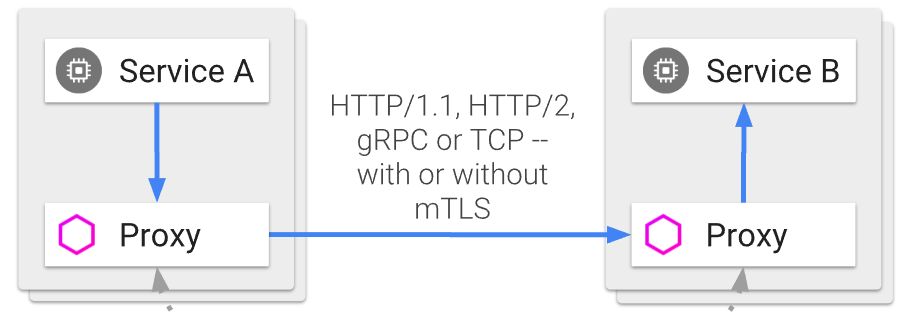

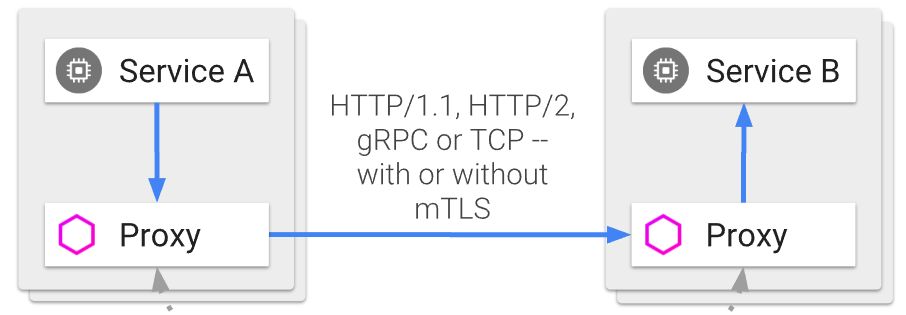

The operating principle of Istio is based on the injection of a proxy (Envoy) within each Pod. Consequently, every Pod hosts an instance of a given microservice and its co-located Envoy proxy.

All incoming and outgoing network connections on each Pod are routed through that proxy (via iptables rules), which is then able to apply calling and routing policies independently of the hosted microservice.

(from https://istio.io/docs/concepts/what-is-istio/arch.svg)

This proxy handles the routing of each call to the instances of the right microservice. This routing can be done according to various criteria, most of the time via a mapping of the host of the URL to a particular service type (but the path of the URL or even the HTTP headers can also be used).

For example, suppose an Istio VirtualService named "productservice" has been defined in the Kubernetes cluster and associated with the Product microservice. The Cart microservice (using the examples from the diagrams in this post) only needs to call a URL such as http://productservice/catalogs/112 and rely on Istio to route the call to an available instance of the Product service, with Istio itself handling aspects such as timeouts, circuit breaking and retries.
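As a minimal sketch of what such a call could look like from the Cart side, assuming Java 11+ and the JDK's built-in HTTP client (the class name, the 5-second timeout and the helper method are illustrative assumptions, not code generated by JHipster):

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

/** Sketch: the Cart service addresses the Product service by its mesh name only. */
final class ProductCatalogCall {

    // Assumption: "productservice" is the host exposed by the Istio VirtualService.
    static final String PRODUCT_BASE_URL = "http://productservice";

    /** Builds the GET request for one catalog; no replica IP or port lookup is needed. */
    static HttpRequest catalogRequest(long catalogId) {
        return HttpRequest.newBuilder(URI.create(PRODUCT_BASE_URL + "/catalogs/" + catalogId))
                // Hypothetical client-side safety net; retries and circuit breaking are left to the sidecar.
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = catalogRequest(112);
        System.out.println(request.method() + " " + request.uri());
        // Inside the cluster, the request would then be sent with
        // HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).
    }
}
```

The point of the sketch is that the caller never resolves an instance itself: the hostname is the only thing it knows, and the Envoy proxy picks the replica.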

This partly competes with the behavior of the typical JHipster architecture, in which these aspects are managed by the Spring Cloud ecosystem. However, the generator is smart enough to allow both mechanisms to coexist when asked to deploy this architecture on Istio:

The main adaptation is to modify the registration mode of microservices in Eureka:

- The generated Kubernetes descriptor sets appropriate environment variables to disable IP registration in Eureka and replace it with registration by name.

- For Eureka, this name is considered to be the hostname of the machine hosting the service. Here it will be filled with the name of the Istio VirtualService associated with the microservice being deployed ("productservice" in my example).

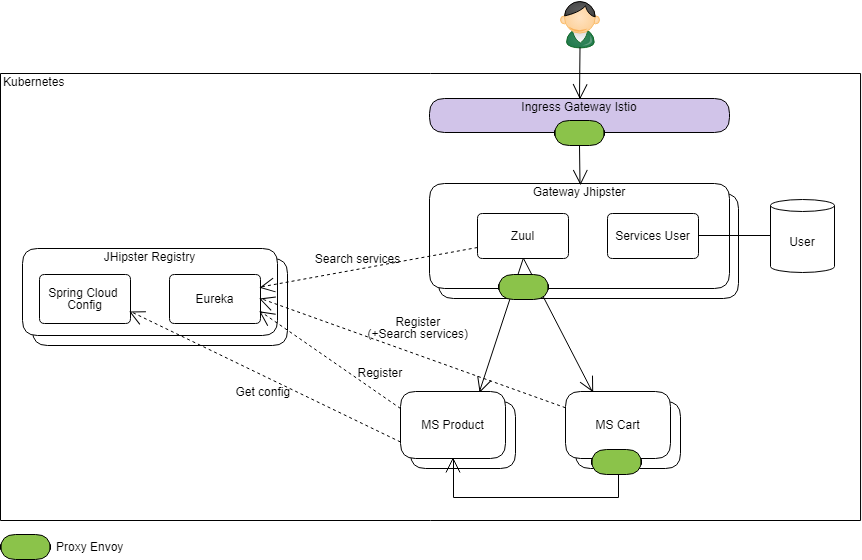

In the end, we obtain an equivalent architecture:

Envoy proxies have been represented in green on outgoing calls (but they also capture incoming calls). The Istio gateway has also been represented on the Ingress (whose role is to route external calls to the cluster to the right services).

Please note that here each instance of a given microservice registers itself with Eureka under the same hostname ("productservice" in my example above for the Product microservice). With 2 replicas, Ribbon will have the choice between two instances: "http://productservice/" and "http://productservice/"... Clearly, it will not really play its load-balancer role, since in the end it is the Envoy proxy that decides to route "http://productservice/" to either replica of the Product microservice.
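To make this concrete, here is a small simulation, not Ribbon's actual implementation, of a client-side round-robin over a list of registered instances. The class and method names are hypothetical; the only thing taken from the text is that both replicas register the same URL.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch: client-side round-robin over instances that all registered the same hostname. */
final class SameHostnameRoundRobin {

    private final List<String> instanceUrls;
    private final AtomicInteger next = new AtomicInteger();

    SameHostnameRoundRobin(List<String> instanceUrls) {
        this.instanceUrls = List.copyOf(instanceUrls);
    }

    /** Picks the next registered instance in turn, as a simple round-robin balancer would. */
    String choose() {
        int index = Math.floorMod(next.getAndIncrement(), instanceUrls.size());
        return instanceUrls.get(index);
    }

    /** Collects the distinct URLs returned over a number of picks. */
    static Set<String> distinctTargets(SameHostnameRoundRobin balancer, int picks) {
        Set<String> seen = new LinkedHashSet<>();
        for (int i = 0; i < picks; i++) {
            seen.add(balancer.choose());
        }
        return seen;
    }

    public static void main(String[] args) {
        // Two replicas of the Product microservice, both registered by name rather than by IP.
        var byName = new SameHostnameRoundRobin(
                List.of("http://productservice/", "http://productservice/"));
        System.out.println(distinctTargets(byName, 4));
    }
}
```

However many times the balancer alternates, the set of distinct targets contains a single URL, so the actual choice of replica is left entirely to the Envoy proxy.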

For inter-service calls (Cart to Product in the schema), it's the same behavior:

- if the developer uses the standard mechanisms made available by JHipster, Eureka and Ribbon will be involved, but it is the Istio proxy that will have the last word on load balancing

- The use of a simple HTTP client will have the same overall effect (taking into account the additional remarks below).

It should be noted, however, that the presence of Ribbon and Hystrix can have side effects:

- Ribbon's retry mechanisms and those of Istio will add up: it is desirable to activate only one of the two for more control (by default, JHipster does not activate Ribbon's retry mechanism, but Istio's retry mechanism is activated in the generated descriptors).

- Hystrix (and/or Feign) and Istio timeouts can also compete, so you have to configure them consistently: you probably don't want the Hystrix timeout to trigger before the Istio timeout (or during the retries configured in Istio).

("Tackling complexity in the heart of Spring Cloud Feign errors" is a good source of information on configuring Hystrix in a Spring Cloud environment, especially on calculating timeouts in the presence of retries. It will also help you ask yourself the right questions.)
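The consistency check between the two timeouts can be sketched as a small calculation. This is a simplified model under stated assumptions: the sidecar makes one initial try plus a configured number of retries, each bounded by a per-try timeout, the whole call is capped by an overall timeout, and back-off delays between retries are ignored. All values and names below are hypothetical.

```java
import java.time.Duration;

/** Sketch: compare a Hystrix timeout with the worst-case time the sidecar may spend on a call. */
final class TimeoutBudget {

    /** First try plus retries, each bounded by perTryTimeout, capped by the overall timeout. */
    static Duration istioWorstCase(Duration overallTimeout, int retryAttempts, Duration perTryTimeout) {
        Duration allTries = perTryTimeout.multipliedBy(1L + retryAttempts);
        return allTries.compareTo(overallTimeout) < 0 ? allTries : overallTimeout;
    }

    /** True when Hystrix waits at least as long as the sidecar's worst case. */
    static boolean hystrixOutlastsIstio(Duration hystrixTimeout, Duration istioWorstCase) {
        return hystrixTimeout.compareTo(istioWorstCase) >= 0;
    }

    public static void main(String[] args) {
        // Hypothetical settings: 10 s overall timeout, 3 retries, 2 s per try.
        Duration worstCase = istioWorstCase(Duration.ofSeconds(10), 3, Duration.ofSeconds(2));
        System.out.println("Sidecar worst case: " + worstCase.toSeconds() + " s");
        System.out.println("Hystrix at 5 s is consistent: "
                + hystrixOutlastsIstio(Duration.ofSeconds(5), worstCase));
        System.out.println("Hystrix at 9 s is consistent: "
                + hystrixOutlastsIstio(Duration.ofSeconds(9), worstCase));
    }
}
```

With these numbers, a 5-second Hystrix timeout would fire while the sidecar is still retrying, which is exactly the situation the remark above warns against.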

The Istio-native architecture

The previous configuration has the merit of letting the application run without major changes, but it does not fully embrace Istio's mechanisms or the simplification they bring to the application code (and its configuration).

Ray Tsang (@saturnism) of Google, who initiated the work on Istio in JHipster, has meanwhile worked on generating an architecture better suited to Istio.

In the current state, the change of architecture is however rather significant:

- it actually consists of completely disabling the concept of Service Discovery when generating the JHipster gateway and microservices: Zuul configurations and Eureka clients are no longer generated in the JHipster gateway and microservices.

- the main effect is that the JHipster gateway can no longer forward any call to the microservices.

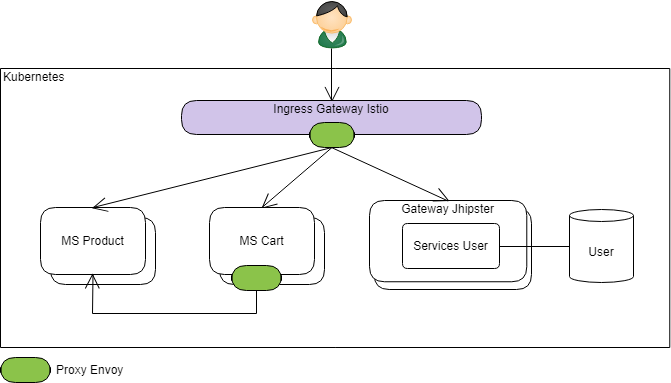

When generating Kubernetes/Istio descriptors, JHipster takes into account the fact that the application elements were not generated with the service discovery option and adapts accordingly:

- on the Istio gateway (the entry point from the outside world), a route is created to access each microservice directly (based on the URL's contextPath)

- the "JHipster Gateway" takes the same place as any of the other services and only hosts the web application and core services such as the user repository.
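The idea of routing on the context path can be illustrated with a small ordered route table. This is only a sketch of the principle, not Istio's matching algorithm: routes are checked in declaration order, matching is done on whole path segments for simplicity, and the service names ("productservice", "cartservice", "gateway") are assumptions made for the example.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Sketch: first-match routing of a request path to a service, based on its context path. */
final class ContextPathRoutes {

    // Insertion order is preserved so that routes are evaluated in declaration order.
    private final Map<String, String> prefixToService = new LinkedHashMap<>();

    ContextPathRoutes route(String prefix, String service) {
        prefixToService.put(prefix, service);
        return this;
    }

    /** Returns the service of the first route whose prefix covers the given path. */
    Optional<String> resolve(String path) {
        for (Map.Entry<String, String> entry : prefixToService.entrySet()) {
            String prefix = entry.getKey();
            String withSlash = prefix.endsWith("/") ? prefix : prefix + "/";
            if (path.equals(prefix) || path.startsWith(withSlash)) {
                return Optional.of(entry.getValue());
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        ContextPathRoutes routes = new ContextPathRoutes()
                .route("/product", "productservice")
                .route("/cart", "cartservice")
                // Catch-all: everything else goes to the service hosting the web application.
                .route("/", "gateway");
        System.out.println(routes.resolve("/product/112"));
        System.out.println(routes.resolve("/index.html"));
    }
}
```

In this layout the "JHipster Gateway" is just the target of the catch-all route, which mirrors the fact that it no longer sits in front of the other services.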

The architecture looks like this:

Deepu K Sasidharan (@deepu105), one of the main contributors to JHipster, has also illustrated the architectural impact of using Istio in this article: JHipster microservices with Istio service mesh on Kubernetes.

Here, there is no more Netflix stack: external calls are routed directly from the ingress to the right service (while benefiting from circuit breaking and retries), and inter-service calls need an HTTP client to be performed (again benefiting from Istio's services).

To be more precise, we can of course keep Feign (originally created by Netflix) to make these calls, but Hystrix, Ribbon and Eureka are no longer necessary in the general case (in some cases, Hystrix can still provide some services). The interested reader can read this comparison between Envoy and Hystrix from Christian Posta (@christianposta).

This architecture, however, has two main impacts:

- the JHipster gateway no longer serves as an application gateway (despite its name)

- removal of the Service Discovery function has completely erased the JHipster Registry from the landscape, and Spring Cloud Config with it.

The JHipster gateway as an application gateway has several roles to play in a microservice architecture: the routing of calls to the microservices is only one of these roles.

Depending on the needs, an application gateway must be able:

- to filter some headers

- to manage CORS headers

- to manage throttling (see the implementation of RateLimitingFilter provided by JHipster)

- to manage an authentication context

Note: the "JWT" authentication mode generated by JHipster works well here, but the other modes (including UAA, which is also a good option because it remains stateless) will need the gateway. Integrating a more powerful JWT authentication (with token refresh in particular) will also often require a gateway.

- to apply cross-cutting security rules

- ...

Different strategies can be used to handle all of these aspects, but an application gateway is still the easiest way to host them centrally.

Depending on the needs, the two points above can be acceptable but I propose below an architecture that is coherent with Istio while still preserving the additional features of the classic JHipster architecture.

An ideal architecture?

Disclaimer: as of today, JHipster does not know (yet?) how to generate this architecture directly. It can be seen below that this would probably involve making specific changes to the generated code of the gateway that would only make sense for this particular case.

The goal here is to put the JHipster gateway in front of the microservices and possibly to keep Spring Cloud Config in the landscape.

The main change required to JHipster's "standard" code concerns how the JHipster gateway delegates calls to the microservices:

- Routing to the right microservices remains a responsibility that we want to delegate to the Istio infrastructure: the JHipster gateway simply has to call a virtual service configured at the Istio level to perform the routing. The routing configuration is almost the same as that of the Istio gateway in the previous architecture.

Example:

- The gateway receives a request: http://gatewayjhipster/product/xxxx,

- after having applied the appropriate filters (security, throttling, etc ...), it must simply call a URL of the following form: http://servicerouter/product/xxxx

- at the Istio level, a "VirtualService" named "servicerouter" will have been defined. According to the contextPath (here "/product" or "/cart"), it will be able to route the calls to an instance of Product Microservice (or respectively of the Cart microservice).
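On the gateway side, the delegation described in this example boils down to replacing the host of the incoming URL while keeping the path and query string. Here is a minimal sketch of that step using only the JDK; the class and method names are hypothetical, and this is not the code of a Zuul routing filter.

```java
import java.net.URI;
import java.net.URISyntaxException;

/** Sketch: rewrite an incoming gateway URL so that it targets the "servicerouter" virtual service. */
final class ServiceRouterDelegation {

    // Host name of the Istio VirtualService used in the example above.
    static final String ROUTER_HOST = "servicerouter";

    /** Keeps path and query untouched; only the host changes, so Istio can route on the context path. */
    static URI toRouterUri(URI incoming) {
        try {
            // Port -1 means "no explicit port"; user info and fragment are dropped.
            return new URI("http", null, ROUTER_HOST, -1, incoming.getPath(), incoming.getQuery(), null);
        } catch (URISyntaxException e) {
            throw new IllegalArgumentException("Cannot rewrite " + incoming, e);
        }
    }

    public static void main(String[] args) {
        URI incoming = URI.create("http://gatewayjhipster/product/xxxx");
        System.out.println(toRouterUri(incoming));
    }
}
```

The gateway would apply its filters (security, throttling, etc.) before this rewrite and then forward the request to the resulting URL with whatever HTTP client it uses.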

In the illustration above, I chose to keep Zuul in the JHipster gateway to continue to benefit from the custom filters provided by Spring Cloud (in Spring Cloud Security, for example) or by JHipster, such as the RateLimitingFilter.

- However, the standard Zuul routing filter must be reimplemented/reconfigured in order to meet the strategy described above.

- in particular, we don't want to integrate Hystrix (or Ribbon) into the configuration of this Zuul server (because, as noted above, they compete with Istio and their configurations might not be consistent).

Of course, if the filters in question are not necessary, Zuul can simply be removed and replaced with a simple proxy component that merely delegates HTTP calls to URLs of the form http://servicerouter/xxxx.

Conclusion

The microservice architecture of JHipster is based on Spring Cloud and in particular on the Netflix stack (although alternatives such as Consul and Traefik are also available), and that totally makes sense. It is therefore perfectly normal that this architecture applied as is on Istio shows some limitations.

As I described at the end of this post, I think that the essence of this architecture can remain valid even hosted on Istio. However, this would require modifying the generated code on the JHipster gateway to explicitly handle this particular case.

It is difficult to make everyone happy: JHipster already offers a very rich combination of generation options and is therefore complex to maintain. So, in its current state, interested readers will have to implement the strategy described above themselves.

Last but not least, version 1.0 of Istio was released at the end of July 2018. It is a very promising technology but remains young and little known. Spring Cloud, especially in a context where it is well understood, still responds very well to most issues.
